Zero-Shot Tokenizer Transfer

Language models (LMs) are bound to their tokenizer, which maps raw text to a sequence of vocabulary items (tokens). This restricts …

Dynamic Memory Compression: Retrofitting LLMs for Accelerated Inference

Transformers have emerged as the backbone of large language models (LLMs). However, generation remains inefficient due to the need to …

Scaling Sparse Fine-Tuning to Large Language Models

Large Language Models (LLMs) are difficult to fully fine-tune (e.g., with instructions or human feedback) due to their sheer number of …

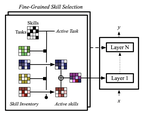

Combining Modular Skills in Multitask Learning

A modular design encourages neural models to disentangle and recombine different facets of knowledge to generalise more systematically …

Visually Grounded Reasoning across Languages and Cultures

The design of widespread vision-and-language datasets and pre-trained encoders directly adopts, or draws inspiration from, the concepts …

Modelling Latent Translations for Cross-Lingual Transfer

While achieving state-of-the-art results in multiple tasks and languages, translation-based cross-lingual transfer is often overlooked …

Composable Sparse Fine-Tuning for Cross-Lingual Transfer

Fine-tuning all parameters of a pre-trained model has become the mainstream approach for transfer learning. To increase its efficiency …

XCOPA: A Multilingual Dataset for Causal Commonsense Reasoning

In order to simulate human language capacity, natural language processing systems must complement the explicit information derived from …

Multi-SimLex: A Large-Scale Evaluation of Multilingual and Cross-Lingual Lexical Semantic Similarity

We introduce Multi-SimLex, a large-scale lexical resource and evaluation benchmark covering datasets for 12 typologically diverse …

Parameter Space Factorization for Zero-Shot Learning across Tasks and Languages

Most combinations of NLP tasks and language varieties lack in-domain examples for supervised training because of the paucity of …

Towards Zero-shot Language Modeling

Can we construct a neural language model which is inductively biased towards learning human language? Motivated by this question, we …

Cross-lingual Semantic Specialization via Lexical Relation Induction

Semantic specialization integrates structured linguistic knowledge from external resources (such as lexical relations in WordNet) into …

Informing Unsupervised Pretraining with External Linguistic Knowledge

Unsupervised pretraining models have been shown to facilitate a wide range of downstream applications. These models, however, still …

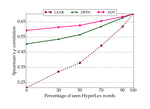

Specializing Distributional Vectors of All Words for Lexical Entailment

Semantic specialization methods fine-tune distributional word vectors using lexical knowledge from external resources (e.g., WordNet) …

Modeling Language Variation and Universals: A Survey on Typological Linguistics for Natural Language Processing

Linguistic typology aims to capture structural and semantic variation across the world’s languages. A large-scale typology could …

Adversarial Propagation and Zero-Shot Cross-Lingual Transfer of Word Vector Specialization

Semantic specialization is the process of fine-tuning pre-trained distributional word vectors using external lexical knowledge (e.g., …