I am an assistant professor in Natural Language Processing at the University of Edinburgh and a visiting professor at NVIDIA.

If you are a prospective PhD student or postdoc, please visit the FAQ page for more information.

My research focuses on:

-

adaptive memory and tokenization in foundation models (see my NeurIPS 2024 tutorial on dynamic sparsity): I aim to redefine the units of computation of foundation models by adaptively compressing the sequences of their hidden representations and memory. This allows models to tokenize raw, modality-agnostic data end-to-end, learning hierarchical abstractions. Simultaneously, it provides the foundations for permanent model memories and inference-time hyper-scaling.

-

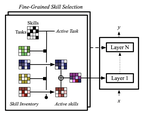

modular deep learning: I am interested in designing neural architectures that route information to specialised modules (e.g., sparse subnetworks). This facilitates systematic generalisation and conditional computation.

-

computational typology: I wish to understand how languages vary, across the world and its cultures, within a computational framework. Multimodal models in particular give us an powerful tool to study how form depends on grounded, embodied representations of meaning and function.

Currently, my research is supported by the ERC Starting Grant “Adaptive Tokenization and Memory in Foundation Models for Efficient and Long-Horizon AI”, the ARIA grant “Tracking Evolving AI and Compute”, and various gifts from Google DeepMind, NVIDIA, and NatWest.

Previously, I was a visiting postdoctoral scholar at Stanford University and a postdoctoral fellow in computer science at Mila - Quebec AI Institute in Montreal. In 2021, I obtained a PhD from the University of Cambridge, St John’s College. Once upon a time, I studied modern literature at the University of Pavia. Deep in my heart, I am still a humanist: some of my favourite writers are Italo Calvino, Ursula Le Guin, and Titus Lucretius Carus.

My research has been featured in The Economist and Scientific American, among others. I received a Google Research Faculty Award, two Highlight Awards at ACL 2025, and two Best Paper Awards (EMNLP 2021 and RepL4NLP 2019). I am a board member of SIGTYP, the ACL special interest group for computational typology, a Scholar of the European Lab for Learning and Intelligent Systems (ELLIS), and part of the TACL journal editorial team.